In predictive modeling, accuracy is often the primary focus. However, understanding and quantifying uncertainty is equally crucial in high-stakes domains like healthcare, finance, and autonomous systems. Making decisions without considering uncertainty can lead to severe consequences. Quantifying uncertainty in predictive models improves decision-making and builds trust in machine learning systems. Enrolling in a data science course in Pune is highly recommended to gain expertise in this critical area, as it offers comprehensive training on advanced machine learning techniques.

The Role of Uncertainty in Predictive Modeling

Predictive models generate outputs based on patterns in historical data. However, these predictions are rarely exact because of variability in the data, simplifying model assumptions, and factors the model cannot observe. By quantifying uncertainty, data scientists can provide a range of plausible outcomes rather than a single deterministic prediction.

For instance, a healthcare model predicting a patient's recovery time should report an interval around its estimate, not just a single number, to reflect how uncertain that estimate is. Such capabilities can be developed through a data science course in Pune, which emphasises both theory and application.

Types of Uncertainty in Predictive Models

Uncertainty in predictive modeling can be broadly classified into two types:

1. Aleatoric Uncertainty

This type of uncertainty arises from randomness inherent in the data itself. It cannot be reduced by collecting more data. Examples include variations in how patients respond to the same treatment or short-term fluctuations in stock prices.

2. Epistemic Uncertainty

Epistemic uncertainty arises from a lack of knowledge about the model or data. It can be reduced by improving the model or collecting more data. For example, a model trained on a limited dataset may exhibit high epistemic uncertainty.
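The difference between the two types can be seen in a small simulation. The sketch below assumes hypothetical normally distributed observations (true mean 10, noise standard deviation 2): the estimated noise level (aleatoric) stays near 2 however much data is drawn, while the standard error of the estimated mean (epistemic) shrinks as the sample grows.

```python
import random
import statistics

def simulate(n, noise_sd=2.0, true_mean=10.0, seed=0):
    """Draw n hypothetical noisy observations and return two uncertainty estimates.

    - aleatoric: estimated standard deviation of individual observations
      (stays near noise_sd no matter how large n is)
    - epistemic: standard error of the estimated mean
      (shrinks roughly like 1 / sqrt(n) as more data arrives)
    """
    rng = random.Random(seed)
    data = [rng.gauss(true_mean, noise_sd) for _ in range(n)]
    aleatoric = statistics.stdev(data)
    epistemic = aleatoric / n ** 0.5
    return aleatoric, epistemic

for n in (10, 100, 10_000):
    a, e = simulate(n)
    print(f"n={n:>6}: aleatoric sd {a:.2f}, epistemic (std. error of mean) {e:.3f}")
```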

Learning to distinguish and quantify these two kinds of uncertainty is a fundamental part of a data scientist course, and it enables better decision-making in real-world scenarios.

Methods for Quantifying Uncertainty

1. Probabilistic Models

Probabilistic models like Bayesian regression quantify uncertainty by modeling the output as a probability distribution rather than a single value.
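As an illustration, here is a minimal Bayesian linear regression sketch with one input feature. It assumes a Gaussian prior on the weights with precision `alpha` and Gaussian noise with a known precision `beta`; both values are illustrative assumptions, and in practice they would be tuned or learned (scikit-learn's `BayesianRidge`, for example, estimates them and can return a predictive standard deviation via `predict(X, return_std=True)`). The predictive variance is the noise variance plus a term from the uncertainty in the weights, which grows as the query point moves away from the training data.

```python
import math

def bayesian_linear_regression(xs, ts, alpha=1.0, beta=4.0):
    """Fit t = w0 + w1*x with prior w ~ N(0, alpha^-1 I) and noise precision beta.
    Returns predict(x) -> (predictive mean, predictive standard deviation)."""
    # Posterior precision A = alpha*I + beta * Phi^T Phi, where Phi has rows [1, x]
    n, sx, sxx = len(xs), sum(xs), sum(x * x for x in xs)
    a11, a12, a22 = alpha + beta * n, beta * sx, alpha + beta * sxx
    det = a11 * a22 - a12 * a12
    # Posterior covariance S = A^-1 (closed-form 2x2 inverse)
    s11, s12, s22 = a22 / det, -a12 / det, a11 / det
    # Posterior mean m = beta * S * Phi^T t
    b0 = beta * sum(ts)
    b1 = beta * sum(x * t for x, t in zip(xs, ts))
    m0 = s11 * b0 + s12 * b1
    m1 = s12 * b0 + s22 * b1

    def predict(x):
        mean = m0 + m1 * x
        # noise variance 1/beta plus weight uncertainty phi^T S phi, phi = [1, x]
        var = 1.0 / beta + (s11 + 2 * s12 * x + s22 * x * x)
        return mean, math.sqrt(var)

    return predict

# Hypothetical training data, roughly t = 2x
predict = bayesian_linear_regression([0, 1, 2, 3, 4], [0.1, 1.9, 4.2, 5.8, 8.1])
print(predict(2.0))   # inside the training range: narrower distribution
print(predict(10.0))  # far outside it: wider distribution
```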

2. Bootstrap Sampling

Bootstrap sampling involves resampling the training data with replacement many times and training a separate model on each resample. The spread of the predictions across these models estimates how much the model's output depends on the particular training sample it happened to see.

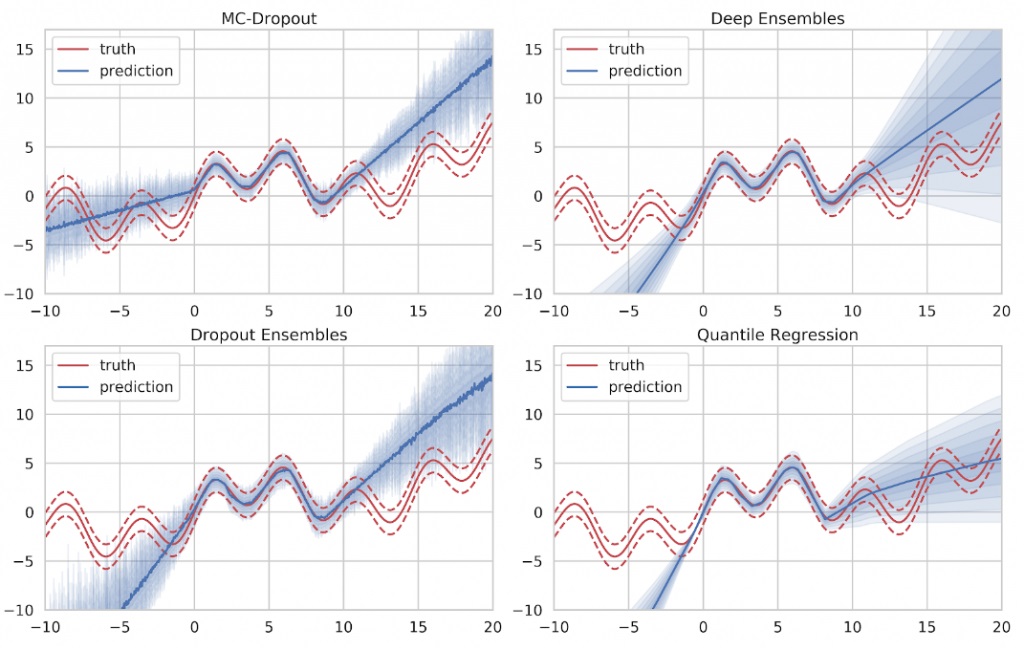
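The bootstrap procedure described above can be sketched in a few lines. This version assumes a simple straight-line least-squares model and hypothetical data; it resamples (x, y) pairs, refits the line on each resample, and reports the mean prediction at a new point with a 95% percentile interval. The interval reflects uncertainty in the fitted line, not the noise of an individual new observation.

```python
import random
import statistics

def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a + b*x; returns (a, b)."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    return my - b * mx, b

def bootstrap_prediction(xs, ys, x_new, n_boot=1000, seed=0):
    """Refit the line on n_boot resamples of the (x, y) pairs and return the
    mean prediction at x_new with a 95% percentile interval."""
    rng = random.Random(seed)
    n = len(xs)
    preds = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # sample rows with replacement
        bx = [xs[i] for i in idx]
        by = [ys[i] for i in idx]
        if len(set(bx)) < 2:
            continue  # all x identical: slope undefined, skip this resample
        a, b = fit_line(bx, by)
        preds.append(a + b * x_new)
    cuts = statistics.quantiles(preds, n=40)  # cut points every 2.5%
    return statistics.fmean(preds), cuts[0], cuts[-1]

# Hypothetical data, roughly y = 2x + 1 plus noise
xs = list(range(10))
ys = [1.2, 2.9, 5.1, 7.2, 8.8, 11.1, 13.0, 14.8, 17.2, 19.1]
mean, lo, hi = bootstrap_prediction(xs, ys, x_new=5.0)
print(f"prediction at x=5: {mean:.2f} (95% interval {lo:.2f} to {hi:.2f})")
```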

3. Monte Carlo Dropout

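In neural networks, Monte Carlo (MC) dropout keeps the dropout layers active during inference and runs many stochastic forward passes for the same input. Each pass behaves like a slightly different model, so the mean of the outputs serves as the prediction and their spread as an estimate of uncertainty. This technique is widely used for estimating uncertainty in deep learning.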
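The mechanism can be shown without a deep learning framework. The sketch below uses a hypothetical one-hidden-layer network whose weights are random placeholders (a real model would be trained with dropout first); the key step is that dropout stays switched on at prediction time, and the mean and standard deviation are taken over many stochastic passes.

```python
import random
import statistics

# Hypothetical tiny network: 1 input -> 16 ReLU hidden units -> 1 output.
# Weights are random placeholders standing in for a trained model.
_init = random.Random(42)
W1 = [_init.gauss(0, 1) for _ in range(16)]
B1 = [_init.gauss(0, 0.1) for _ in range(16)]
W2 = [_init.gauss(0, 0.5) for _ in range(16)]

def forward(x, p_drop, rng):
    """One forward pass with inverted dropout on the hidden layer."""
    out = 0.0
    for w1, b1, w2 in zip(W1, B1, W2):
        h = max(0.0, w1 * x + b1)  # ReLU activation
        if rng.random() < p_drop:
            continue  # this hidden unit is dropped for this pass
        out += w2 * h / (1.0 - p_drop)  # rescale surviving units
    return out

def mc_dropout_predict(x, p_drop=0.2, n_samples=200, seed=0):
    """Keep dropout ON at inference and summarise n_samples stochastic passes."""
    rng = random.Random(seed)
    preds = [forward(x, p_drop, rng) for _ in range(n_samples)]
    return statistics.fmean(preds), statistics.stdev(preds)

mean, sd = mc_dropout_predict(1.5)
print(f"MC dropout prediction: {mean:.3f} +/- {sd:.3f}")
```

Setting `p_drop=0.0` makes every pass identical, so the spread collapses to zero; that is why dropout must remain active at inference for this technique to yield an uncertainty estimate.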

Practical exercises on these techniques are integral to a data scientist course, ensuring hands-on proficiency.

Tools for Measuring Uncertainty

1. Confidence Intervals

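A confidence interval gives a range of plausible values for an unknown quantity, such as a population mean or a model parameter. A 95% confidence interval is constructed so that, across repeated samples, about 95% of such intervals would contain the true value. This offers an intuitive way to communicate uncertainty.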
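The "repeated samples" interpretation can be checked by simulation. This sketch assumes hypothetical normal data and builds a large-sample interval (mean plus or minus a normal quantile times the standard error) for each of many samples, then counts how often the true mean is covered. With only 30 points per sample the normal quantile slightly under-covers; a Student t quantile would bring the rate closer to 95%.

```python
import random
import statistics

def coverage(true_mean=50.0, sd=10.0, n=30, trials=2000, level=0.95, seed=1):
    """Fraction of normal-approximation confidence intervals for the mean
    that contain the true mean across repeated hypothetical samples."""
    rng = random.Random(seed)
    z = statistics.NormalDist().inv_cdf(0.5 + level / 2)
    hits = 0
    for _ in range(trials):
        sample = [rng.gauss(true_mean, sd) for _ in range(n)]
        m = statistics.fmean(sample)
        half = z * statistics.stdev(sample) / n ** 0.5  # z * standard error
        hits += (m - half <= true_mean <= m + half)
    return hits / trials

print(f"empirical coverage: {coverage():.3f}")
```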

2. Prediction Intervals

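Prediction intervals are similar to confidence intervals but cover a single future observation rather than a parameter. They therefore include both the uncertainty in the fitted model and the inherent noise in the data, which makes them wider than the corresponding confidence interval.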
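For the simplest case, a sample from a normal distribution, the two intervals differ only in their half-widths: the confidence interval for the mean uses s/sqrt(n), while the prediction interval for one new observation uses s*sqrt(1 + 1/n). The sketch below assumes a large-sample normal quantile and hypothetical data.

```python
import statistics

def intervals(data, level=0.95):
    """Return (confidence interval for the mean, prediction interval for one
    new observation), assuming normal data and a large-sample z quantile."""
    n = len(data)
    mean = statistics.fmean(data)
    s = statistics.stdev(data)
    z = statistics.NormalDist().inv_cdf(0.5 + level / 2)
    ci_half = z * s / n ** 0.5            # uncertainty in the estimated mean only
    pi_half = z * s * (1 + 1 / n) ** 0.5  # plus the noise of a single new value
    return (mean - ci_half, mean + ci_half), (mean - pi_half, mean + pi_half)

# Hypothetical recovery times in days
ci, pi = intervals([12, 15, 14, 10, 13, 16, 11, 14, 15, 12])
print("confidence interval:", ci)
print("prediction interval:", pi)
```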

3. Uncertainty Metrics

Metrics such as the entropy of the predicted class probabilities (for classification) and the variance of predictions across models or samples (for regression) quantify uncertainty numerically.
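Both metrics are short to compute. In this sketch, entropy is measured in nats (natural logarithm), and the regression predictions are assumed to come from several models or stochastic passes for the same input, as in bootstrap or MC dropout.

```python
import math
import statistics

def predictive_entropy(probs):
    """Shannon entropy (in nats) of a predicted class distribution.
    0 means fully confident; ln(K) is the maximum for K classes."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def prediction_variance(preds):
    """Sample variance of several regression predictions for the same input."""
    return statistics.variance(preds)

print(predictive_entropy([0.98, 0.01, 0.01]))  # confident: low entropy
print(predictive_entropy([1/3, 1/3, 1/3]))     # maximally unsure: ln(3)
print(prediction_variance([10.2, 9.8, 10.1, 9.9]))
```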

Using these tools effectively is a skill honed through a data scientist course, equipping students with the ability to implement robust predictive systems.

Applications of Uncertainty Quantification

1. Healthcare Diagnostics

In medical imaging, uncertainty estimates help radiologists judge how much to trust a model's prediction and flag uncertain cases for closer review, reducing the chance that critical conditions are overlooked.

2. Financial Risk Management

Predictions of stock trends or credit risk are more useful when they come with uncertainty estimates, which allow for better risk management strategies.

3. Autonomous Vehicles

In self-driving cars, understanding the uncertainty in object detection models supports safer navigation by letting the vehicle respond cautiously to ambiguous situations.

Such high-impact applications underscore the importance of training in uncertainty quantification, which is a core part of a data science course.

Challenges in Quantifying Uncertainty

1. Computational Complexity

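Many uncertainty quantification methods are computationally intensive. Exact Bayesian inference is usually intractable and must be approximated with methods such as Markov chain Monte Carlo or variational inference, while bootstrap ensembles and MC dropout require many training runs or forward passes per prediction.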

2. Interpretability

Communicating uncertainty to stakeholders in an understandable manner can be challenging, especially for non-technical audiences.

3. Data Limitations

Limited or biased data can lead to inaccurate uncertainty estimates, impacting decision-making.

Addressing these challenges is a critical aspect of a data science course in Pune, which focuses on practical problem-solving in uncertainty estimation.

Building Robust Decision-Making Systems

Quantifying uncertainty is not just about improving predictions; it’s about creating decision-making systems that can withstand uncertainty. Robust systems consider uncertainty to minimise risks and optimise outcomes.

For instance, in predictive maintenance for industrial machinery, a robust system will account for uncertainty in failure predictions, ensuring timely interventions and cost savings. Such systems are designed using principles taught in a data science course in Pune, which bridges theoretical understanding with real-world applications.
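One way such a system might use uncertainty is sketched below. The decision rule, the function name, and the numbers are hypothetical: given several samples of a machine's predicted remaining useful life (for example from bootstrap models or MC dropout passes), it acts on a pessimistic 10th-percentile estimate instead of the mean, so a machine is scheduled for maintenance when there is a meaningful chance it fails before a repair can be arranged.

```python
import statistics

def schedule_maintenance(rul_samples, lead_time_hours):
    """Hypothetical rule: intervene if the 10th percentile of predicted
    remaining useful life (RUL) is within the maintenance lead time."""
    p10 = statistics.quantiles(rul_samples, n=10)[0]  # first decile cut point
    return p10 <= lead_time_hours, p10

# Hypothetical RUL samples (hours) for one machine
samples = [120, 95, 150, 60, 130, 110, 140, 70, 125, 105]
act, p10 = schedule_maintenance(samples, lead_time_hours=80)
print(f"mean RUL {statistics.fmean(samples):.1f} h, 10th percentile {p10:.1f} h, act: {act}")
```

Here the mean estimate alone (110.5 hours) would suggest waiting, whereas the pessimistic estimate falls inside the 80-hour lead time and triggers an intervention.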

Case Study: Predictive Modeling in Healthcare

Consider a predictive model designed to estimate the survival rate of patients undergoing a specific treatment. Without uncertainty quantification, the model might predict a survival rate of 80%. However, adding uncertainty information might reveal a 70%–90% confidence interval, providing a more nuanced understanding.
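An interval like this can come from the number of patients the estimate is based on. The sketch below assumes a hypothetical cohort of 64 patients with 51 survivors and uses the simple normal-approximation (Wald) interval for a proportion; for small cohorts or rates near 0% or 100%, the Wilson interval is usually a better choice.

```python
import statistics

def survival_rate_interval(survivors, patients, level=0.95):
    """Normal-approximation (Wald) confidence interval for a proportion."""
    p = survivors / patients
    z = statistics.NormalDist().inv_cdf(0.5 + level / 2)
    half = z * (p * (1 - p) / patients) ** 0.5
    return p, p - half, p + half

p, lo, hi = survival_rate_interval(51, 64)  # hypothetical cohort
print(f"survival rate {p:.0%}, 95% CI {lo:.0%} to {hi:.0%}")
# prints: survival rate 80%, 95% CI 70% to 90%
```

With the same 80% point estimate, a larger cohort would produce a narrower interval, which is exactly the extra information a single number hides.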

This additional information enables healthcare professionals to make informed decisions and discuss potential outcomes with patients. Training on such real-world case studies is a highlight of a data science course in Pune, ensuring practical readiness.

The Future of Uncertainty Quantification

As predictive models become more integrated into high-stakes domains, the demand for uncertainty-aware systems will only grow. Emerging trends like explainable AI (XAI) and probabilistic programming are pushing the boundaries of what is possible in uncertainty quantification.

By staying updated through a data science course in Pune, aspiring data scientists can position themselves at the forefront of this exciting field, contributing to innovations that transform industries.

Conclusion

Quantifying uncertainty in predictive models is essential to building robust decision-making systems, especially in high-stakes domains. It provides a deeper understanding of model predictions, enabling stakeholders to make informed decisions and mitigate risks. Techniques like probabilistic modeling, bootstrap sampling, and Monte Carlo dropout empower data scientists to estimate uncertainty effectively.

Incorporating these skills into your professional toolkit is best achieved through a data science course in Pune, which combines theoretical rigor with practical applications. Whether you work in healthcare, finance, or autonomous systems, mastering uncertainty quantification will enable you to create accurate, reliable, and trustworthy predictive models.

Business Name: ExcelR – Data Science, Data Analytics Course Training in Pune

Address: 101 A ,1st Floor, Siddh Icon, Baner Rd, opposite Lane To Royal Enfield Showroom, beside Asian Box Restaurant, Baner, Pune, Maharashtra 411045

Phone Number: 098809 13504

Email Id: enquiry@excelr.com